AquabrowserUX Final Project Post

Posted on: October 20, 2010

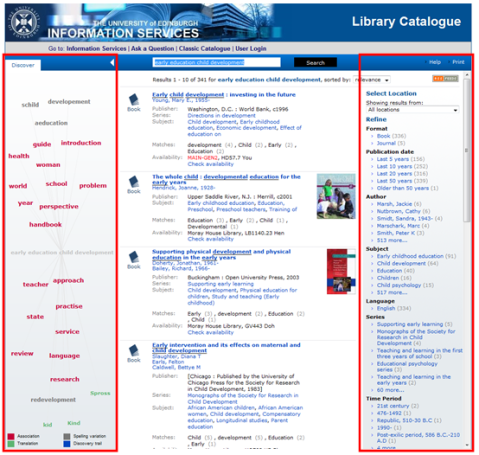

Screen shot of University of Edinburgh’s AquaBrowser with resource discovery services highlighted.

Background

The aim of the AquabrowserUX project was to evaluate the user experience of AquaBrowser at the University of Edinburgh (UoE). The AquaBrowser catalogue is a relatively new digital library service at UoE, provided alongside the Classic catalogue (delivered via Voyager), which has been established at the university for a number of years. The evaluation was holistic and comprised several activities: a contextual enquiry of library patrons within the library environment, stakeholder interviews for persona creation, and usability testing.

Intended outcome(s)

The objectives of the project were three-fold:

- To undertake user research and persona development. Information gathered from the contextual enquiry and stakeholder interviews was used to create a set of personas which will benefit the project and the wider JISC community. The methodologies and processes used were fully documented in the project blog.

- To evaluate the usefulness of resource discovery services. Contextual enquiry was conducted to engage a broader base of users. The study determined the usefulness on site and off site which will provide a more in-depth understanding of usage and behavioural patterns.

- To evaluate the usability of resource discovery systems. Using the personas derived from the user research, typical end users were recruited to test the usability of the AquaBrowser interface. A report was published which discusses the findings and makes recommendations on how to improve the usability of UoE’s AquaBrowser.

The challenge

A number of logistical issues arose after the project kicked off. It became apparent that none of the team members had significant experience in persona development. In addition, the external commitments of subcontracted team members meant that progress was slower than anticipated. The team needed a period of learning to research established methodologies and processes for conducting interviews and analysing data. Consequently the persona development took longer than anticipated, which delayed the recruitment of participants for usability testing (Obj3). The delay also meant that participants would be recruited during the summer months, when the university is traditionally much quieter. Recruiting during this time was a potential risk, but it did not end up being problematic for the project. However, the extra time spent gathering qualitative data meant that it was not possible to validate the segmentation with quantitative data. This was perhaps too ambitious for a project of this scale.

The challenge of conducting the contextual enquiry within the library was to find participants willing to be observed and interviewed afterwards. The timing once again made this difficult as it took place during exams. The majority of people in the library at that time were focussed on one task, which was to revise for exams. This meant that persuading them to spend ten minutes talking to researchers was understandably difficult. In addition, the users in the library at that time were largely limited to those revising, whose needs were specific to that task and did not necessarily represent their behaviour at other times of the year.

Ensuring that real use data and participation were captured during the contextual enquiry was also a challenge. Capturing natural behaviour in context is often difficult to achieve and carries a risk of influence from the researcher. For example, to observe students in the library ethically it is necessary to inform subjects that they are being observed. However, the act of informing users may cause them to change their behaviour. In longitudinal studies the researcher is reliant on the participant self-reporting issues and behaviour, something which they are not always qualified to do effectively.

Recruitment for the persona interviews and usability testing posed a challenge not only in finding enough people but also in finding the right type of people. Finding users from a range of backgrounds, with differing levels of exposure to AquaBrowser, who each matched one of the personas could be difficult and time-consuming. As it turned out, recruitment of staff (excluding librarians) proved difficult and was something that we did not manage to overcome.

Established practice

Resource discovery services for libraries have evolved significantly. There is increasing use of dynamic user interfaces. Faceted searching, for example, provides a “navigational metaphor” for boolean search operations. AquaBrowser is a leading OPAC product which provides faceted searching and new resource discovery functions in the form of its dynamic Word Cloud. Early studies have suggested a propensity of faceted searching to result in serendipitous discovery, even for domain experts.

Closer to home, the University of Edinburgh library conducted usability research earlier this year to understand users’ information seeking behaviour and identify issues with the current digital service in order to create a more streamlined and efficient system. The National Library of Scotland also conducted a website assessment and user research on its digital library services in 2009. This research included creating a set of personas. Beyond this, the British Library is also in the process of conducting its own user research and persona creation.

The LMS advantage

Creating a set of library personas benefits the University of Edinburgh and the wider JISC community. The characteristics and information seeking behaviour outlined in the personas have been shown to be effective templates for the successful recruitment of participants for user studies. They can also help shape future developments in library services when consulted during the design of new services. The persona hypothesis can also be carried to other institutes who may want to create their own set of personas.

The usability test report highlights a number of issues, outlined in Conclusions and Recommendations, which the university, AquaBrowser and other institutions can learn from. The methodology outlined in the report also provides guidance to those conducting usability testing for the first time and looking to embark on in-house recruitment instead of using external companies.

Key points for effective practice

- To ensure realism of tasks in usability testing, user-generated tasks should be created with each participant.

- Involve as many stakeholders as possible. We did not succeed in recruiting academic staff and were therefore unable to evaluate this user group. However, the cooperation with Information Services through project member Liza Zamboglou did generate positive collaboration with the library during the contextual enquiry, persona interviews and usability testing.

- Findings from the user research and usability testing suggest that resource discovery services provided by AquaBrowser for UoE can be improved in order to be useful and easy to operate.

- Looking back over the project and the methods used to collect user data we found that contextual enquiry is a very useful method of collecting natural user behaviour when used in conjunction with other techniques such as interviews and usability tests.

- The recruitment of participants was successful despite the risks highlighted above. The large number of respondents demonstrated that recruitment of students is not difficult when a small incentive is provided and can be achieved at a much lower cost than if a professional recruitment company had been used.

- It is important to consider the timing of any recruitment before undertaking a user study. To maximise potential respondents, it is better to recruit during term time than between terms or during quieter periods. Although the response rate during the summer was still sufficient for persona interviews, the response rate during the autumn term was much greater. Academic staff should also be recruited separately through different streams in order to ensure all user groups are represented.

Conclusions and recommendations

Overall the project outcomes from each of the objectives have been successfully delivered. The user research provided a great deal of data which enabled a set of personas to be created. This artifact will be useful to UoE digital library by providing a better understanding of its users. This will come in handy when embarking on any new service design. The process undertaken to create the personas was also fully documented and this in itself is a useful template for others to follow for their own persona creation.

The usability testing has provided a report (Project Posts and Resources) which clearly identifies areas where the AquaBrowser catalogue can be improved. The usability report makes recommendations that, if implemented, have the potential to improve the user experience of UoE AquaBrowser. Based on the findings from the usability testing and contextual enquiry, it is clear that the contextual issue and its position against the other OPAC (Classic) must be resolved. The opportunity for UoE to add services such as an advanced search and bookmarking system would also go far in improving the experience. We recommend that AquaBrowser and other institutes also take a look at the report to see where improvements can be made. Evidence from the research found that the current representation of the Word Cloud is a big issue and should be addressed.

The personas can be quantified and used against future recruitment and design. All too often users are considered too late in a design (or redesign and restructuring) process. Assumptions are made about ‘typical’ users which are based more on opinion than on fact. With concrete research behind comprehensive personas it is much easier to ensure that developments will benefit the primary user group.

Additional information

Project Team

- Boon Low, Project Manager, Developer, boon.low@ed.ac.uk – University of Edinburgh National e-Science Centre

- Lorraine Paterson, Usability Analyst, l.paterson@nesc.ac.uk – University of Edinburgh National e-Science Centre

- Liza Zamboglou, Usability Consultant, liza.zamboglou@ed.ac.uk – Senior Manager, University of Edinburgh Information Services

- David Hamill, Usability Specialist, web@goodusability.co.uk – Self-employed

Project Website

- Aggregation of project blogs: http://pipes.yahoo.com/pipes/pipe.run?_id=5c4f2c6809c9b91018d8e74eb2dfd366

- Project Wiki: https://www.wiki.ed.ac.uk/display/UX2/AquaBrowserUX

PIMS entry

Project Posts and Resources

Project Plan

- AquabrowserUX project IPR

- User Study of AquaBrowser and UX2.0

- Usefulness of Project Outputs (Studies, UCD)

- AquabrowserUX Project Budget

- AquabrowserUX Project Team and User Engagement

- AquabrowserUX Projected Timeline, Work plan and Project Methodology

Conference Dissemination

User Research and Persona Development (Obj1)

- Persona Creation: Data Gathering Evaluation

- User Research and Persona Creation Part 1: Data Gathering Methods

- User Research and Persona Creation Part 2: Segmentation – Six Steps to our Qualitative Personas

- User Research and Persona Creation Part 3: Introducing the Personas

- Audio files from each participant interview [link forthcoming once sound files are converted to suitable format]

- Personas: http://bit.ly/digitallibrarypersonas

Usefulness of Resource Discovery Services (Obj2)

Usability of Resource Discovery Services (Obj3)

- Recruitment evaluation and screening for personas

- Realism in testing with search interfaces

- Usability Test Report – The University of Edinburgh AquaBrowser by David Hamill http://bit.ly/aquxusabilityreport

- Usability testing highlight videos (x3):

AquabrowserUX Final Project Post

- Final Project Post (this blog post)


Now that the usability testing has been concluded, it seemed an appropriate time to evaluate our recruitment process and reflect on what we learned. Hopefully this will provide useful pointers to anyone looking to recruit for their own usability study.

Recruiting personas

As stated in the AquabrowserUX project proposal (Objective 3), the personas that were developed would help in recruiting representative users for the usability tests. Having learned some lessons from the persona interview recruitment, I made a few changes to the screener and added some new questions. The screener questions can be seen below. The main changes were additional options for the digital services consulted when seeking information (Google, Google Books, Google Scholar, Wikipedia and the National Library of Scotland) and an open question asking students to describe how they search for information such as books or journals online. The additional options reflected the wider range of services students consult as part of their study. The persona interviews demonstrated that these are not limited to university services. The open question had two purposes. Firstly, it collected valuable details from students in their own words, which helped to identify which persona or personas the participant fitted. Secondly, it went some way to revealing how good the participant’s written English was and potentially how talkative they were likely to be in the session. Although this is no substitute for telephone screening, it certainly helped and we found that every participant we recruited was able to talk comfortably during the test. As recruitment was being done by myself and not outsourced to a third party, this seemed the easiest solution at the time.

When recruiting personas, the main things I was looking for were the user’s information seeking behaviour and habits. I wanted to know what users typically do when looking for information online and the services they habitually use to help. The questions in the screener were designed to identify these things while also differentiating respondents into one type of (but not always exclusive) persona.

Screener Questions

The user research will be taking place over a number of dates. Please specify all the dates you will be available if selected to take part

26th August | 27th August | 13th September | 14th September

What do you do at the university?

Undergraduate 1st | 2nd | 3rd | 4th | 5th year | Masters/Post-graduate | PhD

What is your program of study?

Which of the following online services do you use when searching for information and roughly how many hours a week do you spend on each?

Classic catalogue | Aquabrowser catalogue | Searcher | E-journals | My Ed | Pub Med | Web of Knowledge/Science | National Library of Scotland | Google Books | Google Scholar | Google | Wikipedia

How many hours a week do you spend using them?

Never | 1-3 hours | 4-10 hours | More than 10 hours

How much time per week do you spend in any of Edinburgh University libraries?

Never | 1-3 hours | 4-10 hours | More than 10 hours

Tell me about the way you search for information such as books or journals online?

Things we learned

We would recommend a number of things when recruiting participants, which I’ve listed below:

- Finalise recruitment by telephone, not email. Not surprisingly, I found that it’s better to finalise recruitment by telephone once you have received a completed screener. It is quicker to recruit this way as you can determine a suitable slot and confirm a participant’s attendance within a few minutes rather than waiting days for a confirmation email. It also provides insight into how comfortable the respondent is when speaking to a stranger which will affect the success of your testing.

- Screen out anyone with a psychology background. This is something of an accepted norm amongst professional recruitment agencies, but I forgot to include it in the screener. In the end I only recruited one PhD student with a Masters in psychology, so it did not prove much of a problem in this study. Often these individuals do not carry out tasks in the way they normally would, instead examining the task and often trying to beat it. This can produce inaccurate results which aren’t always useful.

- Beware of participants who only want to participate to get the incentive. They will often answer the screener questions in a way they think will ensure selection and not honestly. We had one respondent who stated that they used every website listed more than 10 hours a week (the maximum value provided). It immediately raised flags and consequently that person was not recruited.

- Be prepared for the odd wrong answer. On occasion, we found out during the session that a participant had never seen something they said they had used in the past, and vice versa. This was particularly tricky because often students aren’t aware of Aquabrowser by name and are therefore unable to accurately describe their use of it.
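
Two of the checks above (the psychology background, and the respondent who claimed maximum usage of every service) could be run automatically over completed screeners. The following is only a minimal sketch in Python: the `Response` fields, the `screening_flags` helper and the sample respondent are all hypothetical, and it assumes the programme of study and the hours band for each service have already been collected from the screener.

```python
from dataclasses import dataclass, field

# Top band of the screener's hours-per-week scale.
MAX_USAGE = "More than 10 hours"


@dataclass
class Response:
    """One completed screener (field names are illustrative assumptions)."""
    name: str
    programme: str
    usage: dict[str, str] = field(default_factory=dict)  # service -> hours band


def screening_flags(response: Response) -> list[str]:
    """Return reasons to look more closely at a respondent before recruiting."""
    flags = []
    # A psychology background is commonly screened out.
    if "psychology" in response.programme.lower():
        flags.append("psychology background")
    # Claiming the top band for every service suggests answering to be selected.
    if response.usage and all(band == MAX_USAGE for band in response.usage.values()):
        flags.append("maximum usage claimed for every service")
    return flags


# Hypothetical respondent who ticked the top band for everything.
respondent = Response(
    name="R1",
    programme="MSc Informatics",
    usage={"Google": MAX_USAGE, "Wikipedia": MAX_USAGE, "Classic catalogue": MAX_USAGE},
)
print(screening_flags(respondent))
```

Flags like these are only a prompt to look more closely at a response; they are not a replacement for finalising recruitment by telephone.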

Useful resources

For more information on recruiting better research participants check out the article by Jim Ross on UX Matters: http://www.uxmatters.com/mt/archives/2010/07/recruiting-better-research-participants.php. There is also a similarly useful article by Abhay Rautela on Cone Trees with tips on conducting your own DIY recruitment for usability testing: http://www.conetrees.com/2009/02/articles/tips-for-effective-diy-participant-recruitment-for-usability-testing/.